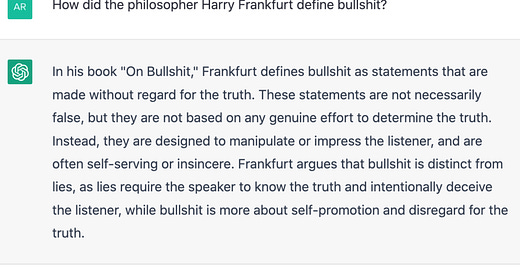

The philosopher Harry Frankfurt defined bullshit as speech that is intended to persuade without regard for the truth. By this measure, OpenAI’s new chatbot ChatGPT is the greatest bullshitter ever. Large Language Models (LLMs) are trained to produce plausible text, not true statements. ChatGPT is shockingly good at sounding convincing on any conceivable topic. But OpenAI is clear that there is no source of truth during training. That means that using ChatGPT in its current form would be a bad idea for applications like education or answering health questions. Even though the bot often gives excellent answers, sometimes it fails badly. And it’s always convincing, so it’s hard to tell the difference.

Yet, there are three kinds of tasks for which ChatGPT and other LLMs can be extremely useful, despite their inability to discern truth in general:

Tasks where it’s easy for the user to check if the bot’s answer is correct, such as debugging help.

Tasks where truth is irrelevant, such as writing fiction.

Tasks for which there does in fact exist a subset of the training data that acts as a source of truth, such as language translation.

Let’s dive in. First the bad news, then the good.

Accuracy is critical in many applications

ChatGPT is the best chatbot released so far. It has delighted users over the last week by generating fantastically weird text, such as an explanation of how to remove a peanut butter sandwich from a VCR... in biblical verse.

But people are also excited about more serious applications, such as using it as a learning tool. Some are even predicting that it will make Google redundant. Yes, ChatGPT is often extremely good at answering questions. But the danger is that you can't tell when it's wrong unless you already know the answer. We tried some basic information security questions. In most cases, the answers sounded plausible but were, in fact, bullshit. And here’s what happened with more complex questions:

Another trope about ChatGPT and education: universities are doomed because ChatGPT can write essays. That's silly. Yes, LLMs can write plausible essays. But the death of homework essays is a good thing for learning! We wrote about this a month ago, and nothing has really changed.

What about search? Google's knowledge panels are already notorious for presenting misinformation authoritatively. Replacing them with an LLM could make things much worse. A paper by Chirag Shah and Emily Bender explores how things could go wrong if we replace search engines with LLMs.

The fact that these models can’t discern the truth is why Meta’s Galactica, an LLM for science, was an ill-conceived idea. In science, accuracy is the whole point. The backlash was swift, and the public demo was pulled down after three days. Similarly, correctness and reliability are everything if you want to use an LLM for answering health-related queries.

But aren’t these models improving quickly?

Of course. But their ability to sound convincing is getting better just as quickly! So we suspect it's getting harder for even experts to spot mistakes.

In fact, models such as Galactica and ChatGPT are great at generating authoritative-sounding text in any requested style: legalese, bureaucratese, wiki pages, academic papers, lecture notes, and even answers for Q&A forums. One side effect is that we can no longer rely on the form of a text to gauge trustworthiness and legitimacy.

Stack Overflow found out the hard way. On the website, users answer programming questions to earn points, and points bring privileges like fewer ads and access to moderator tools. After ChatGPT was released openly to the public, the Q&A forum got thousands of incorrect answers generated using the LLM. But because the answers were written in the right style, they had to be vetted by experts before they could be removed. Within a week, the company had to ban answers generated using ChatGPT to reduce the number of plausible-sounding incorrect answers.

Unless the accuracy of LLM responses can be improved, we suspect that the set of legitimate applications will remain relatively circumscribed. Note that GPT-3 is 2.5 years old. We're told the field is progressing every week, so that's like centuries. When it was released, people confidently predicted that there would be a “Cambrian explosion” of applications. But so far, there have been zero mainstream applications — except GitHub Copilot, if you count programming as mainstream.

The accuracy issue is not necessarily hopeless. Intriguingly, LLMs seem to acquire some capacity to discern truth as a byproduct of learning to sound convincing. When researchers asked an LLM to evaluate its own proposed answers for accuracy, it did far better than random chance! Work is underway to integrate this ability into the default behavior of chatbots, so that accurate answers are more likely to be produced.
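One way to picture how such self-evaluation could be put to use: sample several candidate answers, have the model rate each one, and return the highest-rated. The sketch below is only an illustration of that idea, not a description of how any existing chatbot works. `generate` and `self_score` are hypothetical stand-ins for calls to a language model, stubbed out with toy functions so the example runs.

```python
from typing import Callable, List, Tuple


def pick_best_answer(
    question: str,
    generate: Callable[[str, int], List[str]],
    self_score: Callable[[str, str], float],
    n_candidates: int = 3,
) -> Tuple[str, float]:
    """Sample candidates, have the model score each, and return the top one."""
    candidates = generate(question, n_candidates)
    scored = [(answer, self_score(question, answer)) for answer in candidates]
    return max(scored, key=lambda pair: pair[1])


# Toy stand-ins (hypothetical): a real system would call an LLM here.
def toy_generate(question: str, n: int) -> List[str]:
    return ["Paris", "Lyon", "Marseille"][:n]


def toy_self_score(question: str, answer: str) -> float:
    # Pretend the model reports its own confidence that the answer is true.
    return {"Paris": 0.92, "Lyon": 0.05, "Marseille": 0.03}.get(answer, 0.0)


best, confidence = pick_best_answer(
    "What is the capital of France?", toy_generate, toy_self_score
)
print(best, confidence)
```

The selection step is only as good as the scores: if the model is confidently wrong about its own answer, this procedure returns the wrong answer with a high score attached.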

Meanwhile, here are three kinds of tasks for which LLMs are already useful.

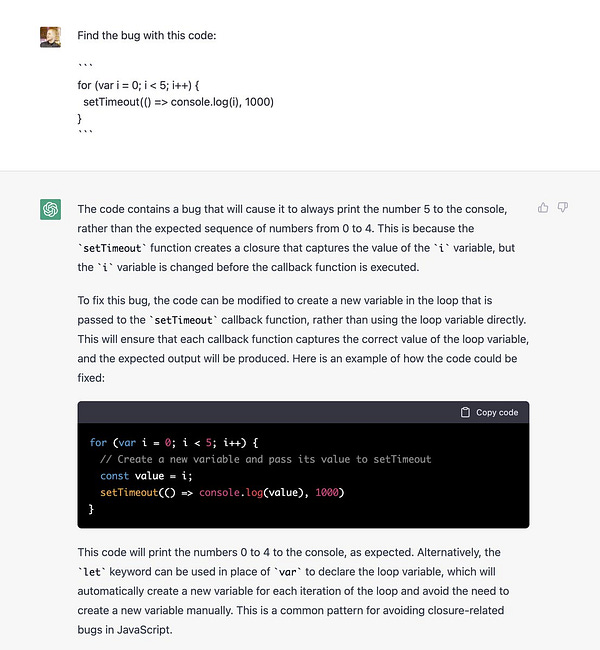

Coding: the user can access ground truth

Debugging code is an application where programmers, especially novices, could benefit from LLMs. Note that once a bug is pointed out, it is generally easy to verify, so the fact that the bot’s answers might sometimes be wrong isn’t as much of a concern.

Generating code is a trickier story. In theory, the user can verify that automatically generated code is free of bugs (perhaps with the help of LLMs). But it is unclear whether this would be faster than manually writing the code. Security bugs are a particularly serious concern. A study last year found that Copilot generated insecure code 40% of the time. The authors don’t compare this figure to human programmers or offer an opinion on whether and when Copilot use is appropriate, but it is clear from the result that caution is warranted.

Copilot is intended to increase the productivity of experts. What about users who can’t code — can they use AI tools to generate simple scripts? There’s a lot of promise here, too. Here’s one small experiment:

![Write a Python3 program that receives a list of strings on standard input, one per line, reverses each string, and prints them to standard output.

Here is a simple program that will receive a list of strings on standard input, reverse each string, and print them to standard output:

    # Read the strings from standard input
    while True:
        try:
            line = input()
        except EOFError:
            break

    # Reverse the string and print it
    print(line[::-1])

To use this program, you can simply copy and paste the code into a file and run it with a Python interpreter. For example, you could save the code to a file called reverse.py and run it like this:

    python3 reverse.py

You will then be able to enter strings, one per line, and the program will reverse each string and print the result. When you are finished, you can press CTRL+D (on Linux or macOS) or CTRL+Z (on Windows) to indicate that there are no more strings to be entered, and the program will exit.](https://substackcdn.com/image/fetch/w_600,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fpbs.substack.com%2Fmedia%2FFjJRG65UUAE93NR.jpg)

![That didn't work. It reversed only the last string, not all the strings. Could you try again?

Here is a modified version of the program that will reverse each string and print them to standard output:

    # Read the strings from standard input
    lines = []
    while True:
        try:
            line = input()
        except EOFError:
            break
        lines.append(line)

    # Reverse each string and print it
    for line in lines[::-1]:
        print(line[::-1])

In this version, we store each line in a list, then reverse the list and iterate over it in reverse order. This way, we ensure that each string is reversed before it is printed.](https://substackcdn.com/image/fetch/w_600,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fpbs.substack.com%2Fmedia%2FFjJRHhIUYAA2FvA.jpg)

![That didn't work. We want to reverse each string but print the list of strings in the same order. We don't want to reverse the list of strings. Could you try again?

Here is a modified version of the program that will reverse each string but print the list of strings in the same order:

    # Read the strings from standard input
    lines = []
    while True:
        try:
            line = input()
        except EOFError:
            break
        lines.append(line[::-1])

    # Print the reversed strings
    for line in lines:
        print(line)

In this version, we store each reversed string in a list, then iterate over the list in the original order and print each reversed string. This way, we ensure that each string is reversed before it is printed, but the list of strings is not reversed.

arvindn@cs.princeton.edu

That worked. Good job.

Thank you! I'm glad that I could help. Let me know if you have any other questions.](https://substackcdn.com/image/fetch/w_600,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fpbs.substack.com%2Fmedia%2FFjJRH9WVEAA73T9.jpg)
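The back-and-forth above is essentially manual testing: each version was run on a few lines and the output compared with what was asked for. A minimal sketch of automating that check follows. The function names are ours (hypothetical), and the three functions restate ChatGPT's three attempts as functions over a list of lines rather than programs reading standard input.

```python
from typing import Callable, List


def attempt_1(lines: List[str]) -> List[str]:
    # First attempt: only the last line read is reversed and printed.
    # (Assumes at least one input line.)
    return [lines[-1][::-1]]


def attempt_2(lines: List[str]) -> List[str]:
    # Second attempt: reverses each string but also reverses the list order.
    return [line[::-1] for line in lines[::-1]]


def attempt_3(lines: List[str]) -> List[str]:
    # Third attempt: reverses each string and keeps the original order.
    return [line[::-1] for line in lines]


def passes_spec(program: Callable[[List[str]], List[str]]) -> bool:
    """Check a candidate against one hand-written input/output pair."""
    sample = ["abc", "hello", "xyz"]
    expected = ["cba", "olleh", "zyx"]
    return program(sample) == expected


results = {f.__name__: passes_spec(f) for f in (attempt_1, attempt_2, attempt_3)}
print(results)
# → {'attempt_1': False, 'attempt_2': False, 'attempt_3': True}
```

A check like this only works because the user already knows what the correct output looks like, which is exactly the condition under which the bot's occasional errors are tolerable.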

The use of LLMs for code generation is an active area of research. There’s a lot of room for improvement in terms of increasing the correctness of the generated code and decreasing the frequency of bugs. This is an exciting area to watch.

Entertainment: truth is irrelevant

ChatGPT has been a source of entertainment over the last week. From writing jokes on specific personalities to explaining algorithms in the style of fast-talking wise guys, people have found many creative uses for the tool. But could we use ChatGPT for more ambitious projects, such as writing fiction?

LLMs are quite far from being able to produce long pieces of text, such as entire novels, because they can only store a small number of tokens at a time. Still, authors and researchers are experimenting with them to generate ideas, expand on their prompts, and change the style of a text (e.g., "rewrite this text to be more Dickensian"). Interactive fiction games like AI Dungeon use LLMs to flesh out storylines using user inputs. We believe there is no fundamental barrier to continuing improvements in this area.

Similarly, text-to-image and image-to-image tools are useful for entertainment because creators can tune the prompt till they find an image they like. The latest app to make waves is Lensa, which creates portraits in various styles once the user uploads a few selfies. Its backend uses Stable Diffusion, an open-source image generation model from Stability AI.

Before we get carried away by this potential: racist, sexist, and biased outputs are still a problem for all generative models, including ChatGPT. The model includes a content filter that declines inappropriate requests, which works well enough to feel like a big improvement over previous tools, but there is still a long way to go.

Translation: exploiting a lurking source of truth

Remarkably, GPT-3 performs roughly on par with special-purpose language translation models, and ChatGPT likely does as well. The likely reason is that it can exploit ground truth in the corpus (which consists, to a first approximation, of all text on the web). For example, there are web pages that are translated into multiple languages. Of course, during training, no explicit labels are provided to tell the model which texts correspond to each other, but presumably, the model can figure this out automatically.[1]

It’s not obvious why a chatbot would be a better tool for translation than existing tools like Google Translate if their performance is equivalent. One possibility is that in a conversation between two speakers of different languages, a tool like ChatGPT could play the role of an interpreter, the advantage being that a tool used within a conversation can keep track of the dialog. This would allow it to rely on context and perform much more effectively and with far less awkwardness for the users.

Conclusion: too early to tell if it will be transformative

Generative AI releases tend to look impressive based on cherry-picked examples that go viral. But that's not the full picture. For many applications, even a 10% failure rate is too high. There seems to be a fairly limited set of use cases where the lack of a source of truth isn’t a deal breaker. While these uses are still tremendously exciting, it’s far from obvious that people will soon be using chatbots in their everyday lives, whether for learning, as a search replacement, or as a conversation partner.
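To see why a seemingly small error rate matters, consider a user who relies on several answers in a row. As a rough illustration only, the sketch below assumes each answer fails independently with the same probability. That is a simplification (real errors are likely correlated and depend on the topic), and the function name is ours.

```python
def prob_at_least_one_failure(failure_rate: float, n_answers: int) -> float:
    """Chance that at least one of n independent answers is wrong."""
    return 1.0 - (1.0 - failure_rate) ** n_answers


# How quickly a 10% per-answer failure rate compounds.
for n in (1, 5, 10, 20):
    print(n, round(prob_at_least_one_failure(0.10, n), 3))
```

Under that assumption, a 10% per-answer failure rate means roughly a 65% chance of at least one wrong answer across ten questions.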

Meanwhile, we’ve seen the first prominent use of LLMs for misinformation (on Stack Overflow). Of course, spammers were already using GPT-3 for search engine marketing and are delighted to get their hands on ChatGPT. But just as the predictions of transformation are overblown, we don’t agree with claims that the web will soon drown in an ocean of misinformation.

We look forward to how people will use LLMs creatively as much as we cringe at the hype and the usual self-serving AGI talk.

[1] Suppose we have two separate datasets of texts in different languages. We don’t even require that documents in one be translations of documents in the other. If we build separate word embeddings of those two languages, those embeddings will approximately be linear transformations of each other! And it is possible to learn this mapping in an unsupervised way. The details of what’s happening in LLMs are quite different, but this shows that, in principle, an explicitly labeled parallel corpus isn’t required for translation.
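The linear-map idea can be illustrated with a toy example. Note the simplifications: the sketch below uses made-up two-dimensional vectors, restricts the map to a pure rotation, and fits it from known word pairs (the supervised Procrustes setting), whereas the unsupervised methods mentioned above learn the mapping without such pairs. All names and numbers are illustrative assumptions.

```python
import math
import random


def rotate(vec, angle):
    """Rotate a 2-D vector counterclockwise by `angle` radians."""
    x, y = vec
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y)


def fit_rotation(source, target):
    """Least-squares rotation angle mapping source points onto target points."""
    dot = sum(sx * tx + sy * ty for (sx, sy), (tx, ty) in zip(source, target))
    cross = sum(sx * ty - sy * tx for (sx, sy), (tx, ty) in zip(source, target))
    return math.atan2(cross, dot)


random.seed(0)
# Toy "embeddings" for five words in language A (made-up numbers).
lang_a = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(5)]
# Language B's embeddings are the same points, rotated by an unknown angle.
true_angle = 0.7
lang_b = [rotate(v, true_angle) for v in lang_a]

estimated = fit_rotation(lang_a, lang_b)
mapped = [rotate(v, estimated) for v in lang_a]
max_error = max(math.dist(m, b) for m, b in zip(mapped, lang_b))
print(round(estimated, 3), max_error < 1e-9)
```

Once the rotation is recovered, a word's vector in one space can be mapped into the other and matched to its nearest neighbor there, which is the basic mechanism behind embedding-based word translation.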

A typical English user of ChatGPT would ask a question about a subject that is well known to him from American or British culture, find some mistaken detail in ChatGPT’s response, and say: “There, I am better than you!” OK, so ChatGPT would get a C for that question, while this English user would get an A or B. What if you now ask ChatGPT a similar question, but related to a foreign culture, say Indian or Indonesian? ChatGPT would likely still get a C for that question, while this English user would likely get an E or F. What if you now ask ChatGPT a similar question, but written in a foreign language, say Vietnamese, which the respondent has to answer in French? ChatGPT would still get a C, while this English user won’t understand a single word of the Vietnamese question, let alone try to answer it in French. The point is: users are focusing on the 0.3% of situations where they might get a higher grade, and being completely obtuse about the 99.7% where ChatGPT is irreversibly outclassing and outperforming them.

I enjoy your post(s) and agree with most of it. However, I do wonder if your perspective on learning is focused on high-level education and/or students who are ambitious and genuinely want to learn. I am an associate professor at a business school, and have tested my last 3-4 years of exam questions on the bot. It generally performs quite poorly and fails the clear majority, yet would pass some of the questions. It might even get a C on one of the questions (out of 25 or so). So, it is clearly not a worry in the sense that it can lead students to high performance or genuine learning. I'd also argue it shouldn't be able to fully answer questions at the master's level. On the other hand, at the bachelor level of an average university, this tool can (and soon will) do just well enough to pass. This can be partially solved by making exams on-site and disallowing the use of the internet. Yet, not everything happens on-site. The positive angle is that the bot helps assess whether an exam question should be used or not, but a substantial number of students, who just want a diploma, will exchange tedious learning activities for letting the bot answer for them. And it will work, at times.